I was pleased to see David Pogue’s positive review of the new Windows Phone, Nokia’s Lumia 900, a couple of weeks ago in the New York Times. Windows Phone has made great progress these past couple of years, and has advanced a beautiful and fresh design language, Metro, which we see being adopted all around Microsoft. (I’ve been a big advocate for Metro language and principles in my own part of the company, Online Services.) Pogue’s only real complaint is that apps for Windows Phone are still thinner on the ground than on iPhone and Android, though as he points out, what really matters is whether the important, great and useful apps are there, not whether the total number is 50,000 or 500,000. Having many apps doesn’t necessarily mean having many quality apps, and most of us have gotten over last decade’s “app mania” that inspired one to fill screen after screen with run-once wonders.

What really made me smile was Pogue’s characterization of what those important apps are, in his view. After reeling off a few of the usual suspects (Yelp, Twitter, Pandora, Facebook, etc.), he added:

> Plenty of my less famous favorites are also unavailable: Line2, Hipmunk, Nest, Word Lens, iStopMotion, Glee, Ocarina, Songify This.
>
> Even Microsoft’s own amazing iPhone app, Photosynth, isn’t available for the Lumia 900.

I’ve also been asked (a number of times) about Photosynth for Windows Phone... hang in there. A nice piece of news we’ve just announced, however, is a new app for Windows Phone that I hope will join Pogue’s pantheon, and that is considerably more advanced than its counterparts on other devices: Translator. Technically this isn’t a new app, but an update, though the update is far more functional than its predecessor.

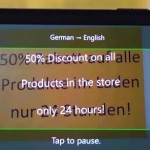

Translator has offline language support, meaning that if you install the right language pack you can use it abroad without a data connection (essential for now, though I wish international data were a problem of the past). It also has a nice speech translation mode, but what’s perhaps most interesting is the visual mode. Visual translation is really helpful when you’re encountering menus, signs, forms, etc., and is especially important when you need to deal with character sets that you not only can’t pronounce, but can’t even write or type (that would be Chinese).

Word Lens, mentioned by Pogue, was one of our inspirations in developing the new Translator. What’s impressive about Word Lens is its ability to process frames from the camera at near-video speed, reading text, generating word-by-word translations, and overlaying those onto the video feed in place of the original text. This is quite a feat, near the edge of achievability on current mobile phone hardware. In my view it’s also one of the first convincing applications of augmented reality on a phone. However, the approach suffers from some inherent drawbacks. First, the translation is word-by-word, which often results in nonsensical translated texts. Second, there isn’t quite enough compute time to do the job properly within a single frame, which yields a somewhat sluggish feel; at the same time, processing each frame independently is wasteful and often makes words flicker in and out of their correct translations, just a bit too fast to follow. For me, these things make Word Lens a good idea, and better than nothing in a pinch, but imperfect.

The visual translation in Translator takes a different approach. It exploits the fact that the text one is aiming at is printed on a surface and is generally constant. What needs to be done frame-by-frame, then, is to lock onto that surface and track it. This is done using Photosynth-like computer vision techniques, but in real time, a bit like the video tracking in our TED 2010 demo. Selected, stabilized frames from that video can then be rectified and the optical character recognition (OCR) can be done on them asynchronously, that is, on a timescale not coupled to the video framerate. Freed from the per-frame time budget, we can do a better job of OCR and translation, using a language model that understands grammar and multi-word phrases. Then, the translated text can be rendered onto the video feed in a way that still tracks the original in 3D. This solves a number of problems at once: improving the translation quality, avoiding flicker, improving the frame rate, and avoiding superfluous repeated OCR. It’s a small step toward building a persistent and meaningful model of the world seen in the video feed and tracking against it, instead of doing a weaker form of frame-by-frame augmented reality. The team has done a really beautiful job of implementing this approach, and the benefits are palpable in the experience.
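For readers who like to see the shape of an idea in code, here is a minimal Python sketch of the decoupling described above: cheap tracking on every frame, with the slow OCR and translation step running on a background thread at its own pace. This is not Translator's actual implementation. Every name in it (`track_surface`, `recognize_and_translate`, `AsyncTranslator`, `render_frame`) is hypothetical, frames are plain dictionaries, and the tracking and OCR steps are stand-in stubs.

```python
import queue
import threading


def track_surface(frame):
    # Hypothetical stand-in for the fast per-frame surface tracker.
    return frame["offset"]


def recognize_and_translate(snapshot):
    # Hypothetical stand-in for the slow path: rectify, OCR, phrase-level translation.
    return "translated(" + snapshot["text"] + ")"


class AsyncTranslator:
    """Runs the slow path on a worker thread, decoupled from the frame rate."""

    def __init__(self):
        self._jobs = queue.Queue(maxsize=1)  # at most one snapshot waiting
        self._lock = threading.Lock()
        self._latest = None
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def _run(self):
        while True:
            snapshot = self._jobs.get()
            try:
                if snapshot is None:  # shutdown signal
                    return
                result = recognize_and_translate(snapshot)
                with self._lock:
                    self._latest = result
            finally:
                self._jobs.task_done()

    def submit(self, snapshot):
        # Never block the video loop: drop the snapshot if one is already waiting.
        try:
            self._jobs.put_nowait(snapshot)
            return True
        except queue.Full:
            return False

    def latest(self):
        with self._lock:
            return self._latest

    def wait(self):
        # Block until all submitted snapshots are processed (handy for tests).
        self._jobs.join()

    def close(self):
        self._jobs.put(None)
        self._worker.join()


def render_frame(frame, translator):
    pose = track_surface(frame)       # cheap, done on every frame
    if frame["stable"]:
        translator.submit(frame)      # slow work is handed off, not awaited
    # Draw whatever translation is currently available at the tracked pose.
    return {"pose": pose, "overlay": translator.latest()}
```

The point of the design is that `render_frame` never waits on OCR: the overlay may be missing for the first few frames, but once a translation arrives it stays put and simply follows the tracked pose, which is what removes the flicker.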

Use this app on your next visit to China! I’d love to read comments and suggestions from anyone trying Translator out in the field.